NVIDIA officially introduces new NVIDIA In-Game Inferencing (NVIGI) SDK for developers; integrates AI inference directly into C++ games and applications

NVIDIA has today officially introduced the NVIDIA In-Game Inferencing (NVIGI) SDK, a unified interface that lets developers orchestrate the deployment of AI models across cloud and local PC execution environments.

🎉 Introducing NVIDIA In-Game Inferencing (NVIGI) SDK.

Game developers can now use NVIGI with ACE to enable seamless integration of AI inference into C++ games and applications for optimal performance and latency. https://t.co/hJ0Lv5esAq

— NVIDIAGameDev (@NVIDIAGameDev) February 20, 2025

The NVIGI SDK lets you integrate AI inference directly into C++ games and applications for optimal performance and latency. Developers can also use it to bring NVIDIA ACE AI characters to their games.

NVIDIA In-Game Inferencing SDK

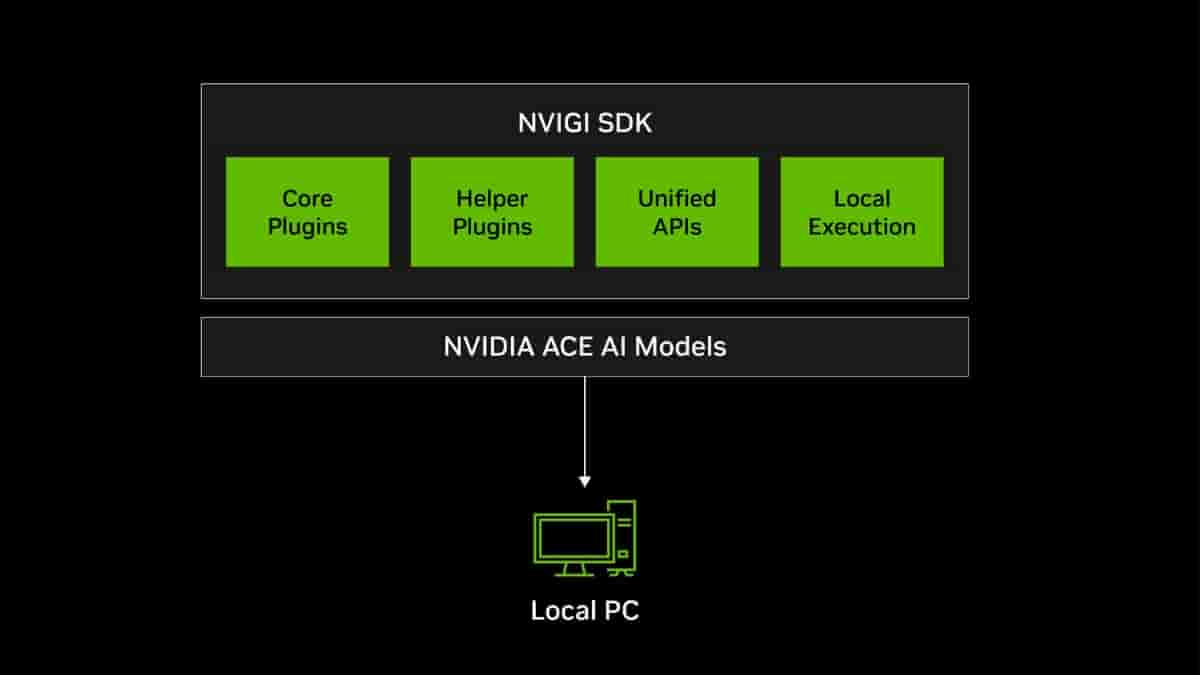

The SDK is a GPU-optimized, plugin-based inference manager designed to simplify the integration of ACE models into gaming and interactive applications. It offers the following functionality:

- Plugin flexibility: Add, update, and manage AI plugins (ASR, language models, embeddings) with ease.

- Windows-native DLLs: Streamline workflows for C++ games and applications.

- GPU optimization: Use compute-in-graphics (CIG) technology for efficient AI inference alongside rendering tasks.

By combining NVIGI with ACE, you can create autonomous characters with advanced generative AI capabilities, such as real-time NPC dialogue, contextual memory, and lifelike animation. NVIGI's architecture enables flexible integration of various AI functionalities through core plugins, helper plugins, unified APIs, and local and cloud execution. It orchestrates an agentic workflow, enabling characters to listen, reason, speak, and animate in real time.

To get started with ACE on-device inference, developers initialize NVIGI, load plugins and models, create the runtime configuration and inference setup, enable GPU scheduling and rendering integration, and then run inference. NVIDIA documents the detailed process separately.
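The order of those steps can be sketched with a small mock. This is only an illustration of the sequencing, assuming a made-up `MockInferenceManager` class: none of the class, method, or parameter names below come from the NVIGI SDK, and the "inference" step simply tags the prompt with the model name instead of running a model.

```cpp
#include <map>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for an inference manager. None of these names come
// from the NVIGI SDK; the class only models the order of the steps.
class MockInferenceManager {
public:
    // Step 1: bring up the manager before anything else.
    void initialize() {
        initialized_ = true;
        steps_.push_back("initialize");
    }

    // Step 2: register a plugin together with the model it should serve.
    void loadPlugin(const std::string& plugin, const std::string& model) {
        require(initialized_, "initialize() must run before loadPlugin()");
        models_[plugin] = model;
        steps_.push_back("load:" + plugin);
    }

    // Steps 3 and 4: fix the runtime settings and opt in to sharing the GPU
    // with rendering work.
    void configure(int maxTokens, bool shareGpuWithRendering) {
        require(!models_.empty(), "load a plugin before configure()");
        require(maxTokens > 0, "maxTokens must be positive");
        maxTokens_ = maxTokens;
        shareGpu_ = shareGpuWithRendering;
        configured_ = true;
        steps_.push_back("configure");
    }

    // Step 5: run inference. A real plugin would evaluate a model here; this
    // mock returns the prompt tagged with the model name.
    std::string runInference(const std::string& plugin,
                             const std::string& prompt) {
        require(configured_, "configure() must run before runInference()");
        auto it = models_.find(plugin);
        require(it != models_.end(), "unknown plugin: " + plugin);
        steps_.push_back("infer:" + plugin);
        return "[" + it->second + "] " + prompt;
    }

    const std::vector<std::string>& steps() const { return steps_; }
    int maxTokens() const { return maxTokens_; }
    bool sharesGpuWithRendering() const { return shareGpu_; }

private:
    // Throws when a step is attempted out of order.
    static void require(bool ok, const std::string& message) {
        if (!ok) throw std::logic_error(message);
    }

    bool initialized_ = false;
    bool configured_ = false;
    bool shareGpu_ = false;
    int maxTokens_ = 0;
    std::map<std::string, std::string> models_;
    std::vector<std::string> steps_;
};
```

Calling `initialize()`, `loadPlugin("gpt", "Mistral-7B-Instruct")`, `configure(128, true)`, and `runInference("gpt", "Hello")` in that order succeeds, while skipping a step throws `std::logic_error`. Enforcing the order in code is a design choice of this sketch, not a documented property of the SDK.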

List of available NVIGI plugins

| NVIGI Plugin | Supported Inference Hardware | Supported Models |
| --- | --- | --- |
| Speech – ASR Local GGML | CUDA-Enabled GPU or CPU | Whisper ASR |
| Speech – ASR Local TRT | CUDA-Enabled GPU | NVIDIA RIVA ASR (coming soon) |
| Language – GPT Local ONNX DML | ONNX-supported GPU or CPU | Mistral-7B-Instruct |
| Language – GPT Local GGML | CUDA-Enabled GPU or CPU | Llama-3.2-3b Instruct, Nemotron-Mini-4B-Instruct, Mistral-Nemo-Minitron-2B-128k-Instruct, Mistral-Nemo-Minitron-4B-128k-Instruct, Mistral-Nemo-Minitron-8B-128k-Instruct, Nemovision-4B-Instruct |
| RAG – Embed Local GGML | CUDA-Enabled GPU or CPU | E5 Large Unsupervised |
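As a rough illustration of how an application might use this table, the sketch below encodes the hardware column as bit flags and filters plugins by what a machine offers. The `PluginInfo` struct, the flag names, and `compatiblePlugins` are all hypothetical and not part of the NVIGI SDK. Treating "ONNX-supported GPU" as its own flag is a simplification: a machine whose GPU qualifies for both would set both flags.

```cpp
#include <string>
#include <vector>

// Hypothetical hardware flags mirroring the table's hardware column.
constexpr unsigned kCpu     = 1u << 0;  // plain CPU execution
constexpr unsigned kCudaGpu = 1u << 1;  // CUDA-enabled GPU
constexpr unsigned kOnnxGpu = 1u << 2;  // ONNX-supported GPU (simplified)

// One table row: plugin name plus the hardware it can run on.
struct PluginInfo {
    std::string name;
    unsigned supportedHardware;  // bitwise OR of the flags above
};

// The plugin list from the table, transcribed by hand (ASCII hyphens).
inline const std::vector<PluginInfo>& pluginTable() {
    static const std::vector<PluginInfo> table = {
        {"Speech - ASR Local GGML",       kCudaGpu | kCpu},
        {"Speech - ASR Local TRT",        kCudaGpu},
        {"Language - GPT Local ONNX DML", kOnnxGpu | kCpu},
        {"Language - GPT Local GGML",     kCudaGpu | kCpu},
        {"RAG - Embed Local GGML",        kCudaGpu | kCpu},
    };
    return table;
}

// Returns the names of plugins that can run on at least one of the
// hardware types present in `availableHardware`.
inline std::vector<std::string> compatiblePlugins(unsigned availableHardware) {
    std::vector<std::string> names;
    for (const PluginInfo& plugin : pluginTable()) {
        if ((plugin.supportedHardware & availableHardware) != 0u) {
            names.push_back(plugin.name);
        }
    }
    return names;
}
```

With this encoding, `compatiblePlugins(kCpu)` returns every plugin except the TRT speech plugin, which the table lists as GPU-only.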