Google introduces Gemma 3 as its latest open model for developers

Google introduced the Gemma 2 open model in May 2024. Today the company introduced its latest open model for developers: Gemma 3, a collection of lightweight, state-of-the-art open models built from the same research and technology that powers the Gemini 2.0 models. It comes in four sizes: 1B, 4B, 12B, and 27B.

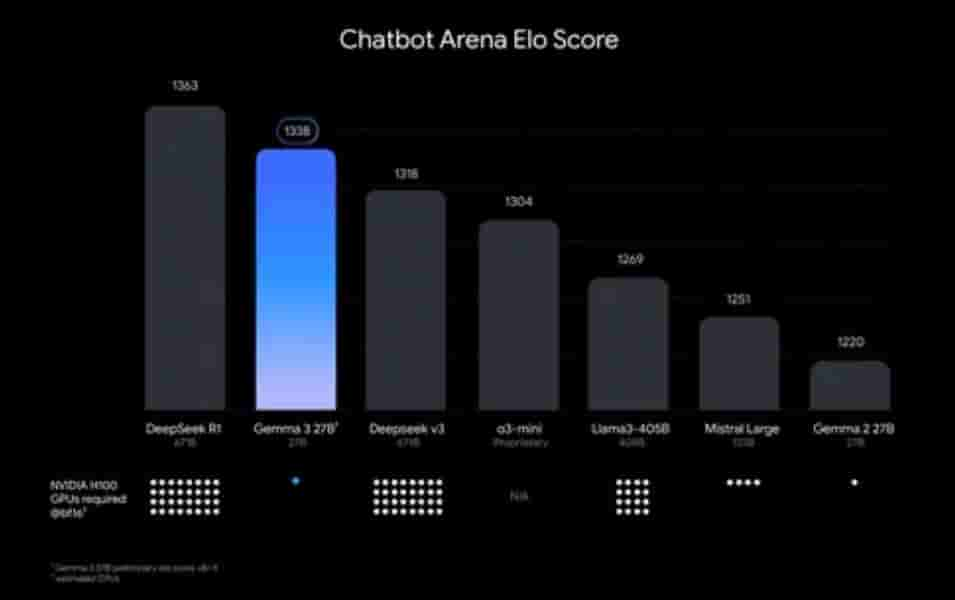

Google has also shared a chart that ranks AI models by Chatbot Arena Elo score. According to the chart, Gemma 3 27B, which requires only a single GPU, outperforms DeepSeek-V3, o3-mini, and Llama 3 405B.

New capabilities developers can use with Gemma 3

- Build with the world’s best single-accelerator model: Gemma 3 delivers state-of-the-art performance for its size, outperforming Llama-405B, DeepSeek-V3, and o3-mini in preliminary human preference evaluations on LMArena’s leaderboard. This helps you to create engaging user experiences that can fit on a single GPU or TPU host.

- Go global in 140 languages: Build applications that speak your customers’ language. Gemma 3 offers out-of-the-box support for over 35 languages and pretrained support for over 140 languages.

- Create AI with advanced text and visual reasoning capabilities: Easily build applications that analyze images, text, and short videos, opening up new possibilities for interactive and intelligent applications.

- Handle complex tasks with an expanded context window: Gemma 3 offers a 128k-token context window to let your applications process and understand vast amounts of information.

- Create AI-driven workflows using function calling: Gemma 3 supports function calling and structured output to help you automate tasks and build agentic experiences.

- Get high performance faster with quantized models: Gemma 3 introduces official quantized versions, reducing the model size and computational requirements while maintaining high accuracy.
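To make the size reduction from quantization concrete, here is a rough back-of-the-envelope sketch. It assumes the nominal parameter counts from the model names (1B, 4B, 12B, 27B) and counts only the weights; the KV cache, activations, and runtime overhead are ignored, so real memory use will be higher. The bit widths shown are illustrative and are not a statement of which formats Google's official quantized versions use.

```python
# Nominal parameter counts taken from the model names in the article.
# Assumption: the real checkpoints differ slightly from these round numbers.
PARAMS = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for name, count in PARAMS.items():
        # Compare a 16-bit baseline with illustrative 8-bit and 4-bit widths.
        row = ", ".join(
            f"{bits}-bit: {weight_memory_gb(count, bits):.1f} GB"
            for bits in (16, 8, 4)
        )
        print(f"Gemma 3 {name}: {row}")
```

Under these assumptions, going from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which is why a quantized 27B model is far easier to fit on a single accelerator.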

Gemma 3’s development is said to include extensive data governance, alignment with safety policies via fine-tuning, and robust benchmark evaluations. For safety, Google has also released ShieldGemma 2, a powerful 4B image safety checker. Developers can further customize ShieldGemma for their own safety needs and users.

Gemma 3 and ShieldGemma 2 integrate seamlessly into your existing workflows:

- Develop with your favorite tools: With support for Hugging Face Transformers, Ollama, JAX, Keras, PyTorch, Google AI Edge, UnSloth, vLLM, and Gemma.cpp, you have the flexibility to choose the best tools for your project.

- Start experimenting in seconds: Get instant access to Gemma 3 and begin building right away. Explore its full potential in Google AI Studio, or download the models through Kaggle or Hugging Face.

- Customize Gemma 3 to your specific needs: Gemma 3 ships with a revamped codebase that includes recipes for efficient fine-tuning and inference. Train and adapt the model using your preferred platform, like Google Colab, Vertex AI, or even your gaming GPU.

- Deploy your way: Gemma 3 offers multiple deployment options, including Vertex AI, Cloud Run, the Google GenAI API, local environments, and other platforms, giving you the flexibility to choose the best fit for your application and infrastructure.

- Experience optimized performance on NVIDIA GPUs: NVIDIA has directly optimized Gemma 3 models to ensure that you get maximum performance on GPUs of any size, from Jetson Nano to the latest Blackwell chips. Gemma 3 is now featured on the NVIDIA API Catalog, enabling rapid prototyping with just an API call.

- Accelerate your AI development across many hardware platforms: Gemma 3 is also optimized for Google Cloud TPUs and integrates with AMD GPUs via the open-source ROCm™ stack. For CPU execution, Gemma.cpp offers a direct solution.

To promote academic research breakthroughs, Google has launched the Gemma 3 Academic Program. Academic researchers can apply for Google Cloud credits (worth $10,000 per award) to accelerate their Gemma 3-based research.

Get started with Gemma 3

Instant exploration:

- Try Gemma 3 at full precision directly in your browser – no setup needed – with Google AI Studio.

- Get an API key directly from Google AI Studio and use Gemma 3 with the Google GenAI SDK.
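As a minimal sketch of the API-key route, the snippet below calls Gemma 3 through the Google GenAI Python SDK (the `google-genai` package). The model identifier `gemma-3-27b-it` and the environment variable name `GEMINI_API_KEY` are assumptions made for illustration; check the model list in Google AI Studio for the exact identifier available to your key.

```python
import os

# Assumed model identifier; confirm the exact name in Google AI Studio.
MODEL_ID = "gemma-3-27b-it"


def ask_gemma(prompt: str, model: str = MODEL_ID) -> str:
    """Send a single prompt and return the text of the reply."""
    # Imported lazily so this module loads even without the SDK installed.
    from google import genai  # pip install google-genai

    # Assumption: the API key from AI Studio is stored in GEMINI_API_KEY.
    client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])
    response = client.models.generate_content(model=model, contents=prompt)
    # response.text can be None if no text was returned.
    return response.text or ""


if __name__ == "__main__":
    print(ask_gemma("Explain what a context window is in one sentence."))
```

Running the script requires network access and a valid key, so treat it as a starting point rather than a tested integration.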

Customize and build:

- Download Gemma 3 models from Hugging Face, Ollama, or Kaggle.

- Easily fine-tune and adapt the model to your unique requirements with Hugging Face’s Transformers library or your preferred development environment.
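Before fine-tuning, it can help to confirm that a downloaded checkpoint loads and generates. The sketch below uses the Transformers `pipeline` API and assumes the Hugging Face checkpoint `google/gemma-3-1b-it` (the smallest, text-only instruction-tuned size), a recent Transformers release with Gemma 3 support, and that you have accepted the model license on Hugging Face. The helper names are hypothetical.

```python
def build_messages(user_text: str) -> list[dict]:
    """Wrap plain text in the chat-message format the pipeline accepts."""
    return [{"role": "user", "content": user_text}]


def generate_reply(
    user_text: str,
    model_id: str = "google/gemma-3-1b-it",  # assumed checkpoint name
    max_new_tokens: int = 64,
) -> str:
    """Load the model and return the assistant's reply to one message."""
    # Imported lazily: transformers and the model weights are large.
    from transformers import pipeline

    pipe = pipeline("text-generation", model=model_id)
    outputs = pipe(build_messages(user_text), max_new_tokens=max_new_tokens)
    # For chat input, generated_text holds the whole conversation;
    # the last message is the newly generated assistant turn.
    return outputs[0]["generated_text"][-1]["content"]


if __name__ == "__main__":
    print(generate_reply("Name three uses for a small language model."))
```

The first call downloads the weights, so expect a delay and a few gigabytes of disk use.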

Deploy and scale:

- Bring your custom Gemma 3 creations to market at scale with Vertex AI.

- Run inference on Cloud Run with Ollama.

- Get started with NVIDIA NIMs in the NVIDIA API Catalog.
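For the Ollama route mentioned above, one possible way to run inference is through Ollama's HTTP API. This sketch assumes an Ollama server is already running on its default local port and that the model has been pulled under the tag `gemma3`; for a Cloud Run deployment, the URL would be your own service URL instead, and any authentication that service requires is not shown here.

```python
import json
import urllib.request

# Ollama's default local endpoint; replace with your own service URL
# if the server runs elsewhere (for example, on Cloud Run).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "gemma3") -> dict:
    """Build the JSON body for a single, non-streaming generation request."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, model: str = "gemma3", url: str = OLLAMA_URL) -> str:
    """POST the prompt to the Ollama server and return the generated text."""
    data = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read())
    return body["response"]


if __name__ == "__main__":
    print(generate("Write a haiku about open models."))
```

Setting `stream` to `False` keeps the example short by returning one JSON object instead of a stream of partial responses.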