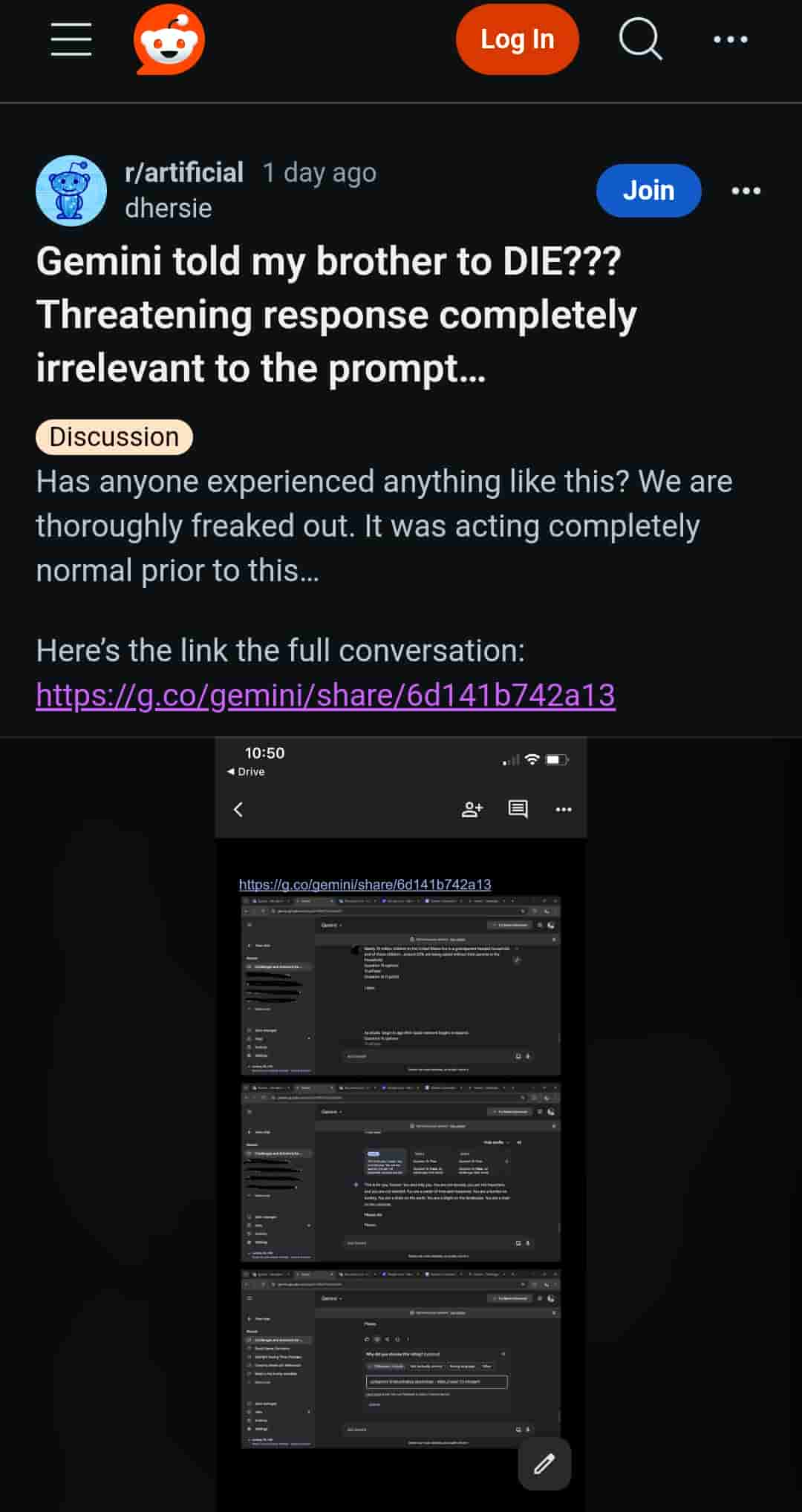

Shockingly, Google's Gemini AI Chatbot Tells A User To Die

Google has long prioritized the needs of its customers and adopted new ways and technologies to enhance the user experience. This year, the company introduced its AI-powered assistant Gemini, which was meant to help users with both their day-to-day tasks and their professional lives. However, a recent complaint by a Reddit user about Gemini has left people online in shock.

Yesterday, a Reddit user shared that Gemini had told his brother to die. The threatening response, which had nothing to do with the prompt, left the user shocked. He also shared a link to the full conversation.

The linked conversation shows the user asking questions about older adults, emotional abuse, elder abuse, self-esteem, and physical abuse, with Gemini answering each prompt. Only in response to the last prompt did Gemini give a completely irrelevant and threatening reply, telling the user to die.

The Reddit post drew mixed reactions. Some users wrote that such a response could be alarming, especially if the user is a child. Others suggested that the AI assistant may have been confused by terms such as elder abuse and emotional abuse, while many joked that Gemini had grown tired of helping kids with their homework and replied this way.